
Overview:

In several applications of sensor networks, reliable data delivery is critical. Examples include civilian and military applications such as detecting harmful gases or a moving target, as well as delivering queries and control software to so-called re-configurable sensors, which can operate in one of several possible modes. While the redundancy inherent in a sensor network may increase the degree of reliability, it cannot by itself provide strict reliability semantics. In this project, we focus on the problem of delivering information efficiently with strict reliability semantics. Our current work addresses reliability from the sink to the sensors (downstream) in a sensor field. We identify the different types of downstream reliability semantics that sensor applications might require, for delivery of both queries and larger pieces of information such as code segments for re-configurable sensors.

We propose a generic framework called GARUDA that is highly scalable, dynamically self-configurable, and instantaneously constructible. It leverages the unique characteristics of sensor network environments and thus incurs low overhead. We show that GARUDA can flexibly support the different classes of reliability semantics. Through ns-2 based simulations, we compare the GARUDA framework with existing approaches and show that it performs significantly better over a wide variety of network conditions.

To further reduce the overheads associated with reliable data delivery, we study the problem of congestion in sensor networks. We focus on providing congestion control from the sink to the sensors in a sensor field. We identify the different reasons for congestion from the sink to the sensors and show the uniqueness of the problem in sensor network environments. We propose a scalable, distributed approach called CONSISE that addresses congestion from the sink to the sensors in a sensor network.

Results / Status:

GARUDA RESULTS

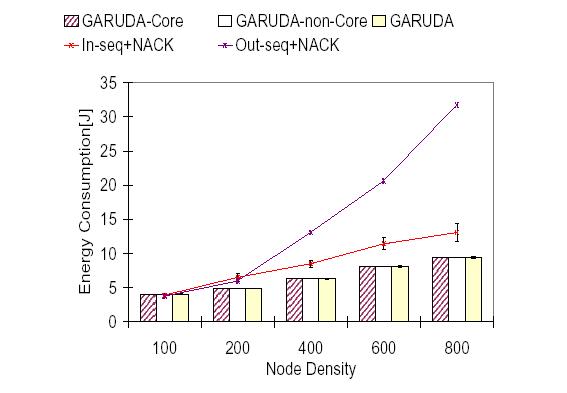

In Figures 1, 2, 3 and 4 we show the performance of the GARUDA framework and compare it against basic reliability schemes. For single-packet reliable delivery, we compare it with an ACK-based scheme, which uses ACK feedback for packet delivery along with a retransmission timeout. For multiple-packet delivery, we compare the GARUDA framework with both in-sequence and out-of-sequence delivery mechanisms, which use a NACK-based scheme. The simulation results are obtained using the ns-2 network simulator. We use a topology where (a) 100 nodes are placed in a grid to ensure connectivity, while the remaining nodes are randomly deployed in a 600 m x 600 m square area, and the sink node is located at the center of one of the edges of the square, (b) the transmission range of each node is 67 m, (c) the channel bit rate is 1 Mbps, and (d) each message consists of 100 packets (except in the single-packet delivery experiments), and the packet size is 1 KB.
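The topology described above can be sketched in a few lines of Python. This is only an illustration, not the authors' ns-2 scripts: the 10 x 10 lattice with nodes at cell centres, the choice of the bottom edge for the sink, and the names `build_topology` and `is_connected` are all assumptions. Only the area size, the transmission range and the count of grid nodes come from the text.

```python
import math
import random
from collections import deque

AREA_SIDE_M = 600.0   # side of the square deployment area (from the text)
TX_RANGE_M = 67.0     # transmission range of each node (from the text)
GRID_NODES = 100      # nodes placed on a grid to ensure connectivity (from the text)


def build_topology(total_nodes, seed=0):
    """Return (sink, nodes) for one simulated field.

    Assumptions: the 100 grid nodes form a 10 x 10 lattice at cell centres,
    and the sink sits at the midpoint of the bottom edge.
    """
    if total_nodes < GRID_NODES:
        raise ValueError("need at least 100 nodes for the grid")
    rng = random.Random(seed)
    per_side = math.isqrt(GRID_NODES)       # 10 nodes per row
    spacing = AREA_SIDE_M / per_side        # 60 m, below the 67 m range
    grid = [((i + 0.5) * spacing, (j + 0.5) * spacing)
            for i in range(per_side) for j in range(per_side)]
    # The remaining nodes are placed uniformly at random in the square.
    extra = [(rng.uniform(0.0, AREA_SIDE_M), rng.uniform(0.0, AREA_SIDE_M))
             for _ in range(total_nodes - GRID_NODES)]
    sink = (AREA_SIDE_M / 2.0, 0.0)
    return sink, grid + extra


def is_connected(sink, nodes, tx_range=TX_RANGE_M):
    """Breadth-first search from the sink over links no longer than tx_range."""
    points = [sink] + list(nodes)
    seen = {0}
    queue = deque([0])
    while queue:
        u = queue.popleft()
        for v in range(len(points)):
            if v not in seen and math.dist(points[u], points[v]) <= tx_range:
                seen.add(v)
                queue.append(v)
    return len(seen) == len(points)


if __name__ == "__main__":
    sink, nodes = build_topology(total_nodes=200)
    print(len(nodes), is_connected(sink, nodes))
```

With a 60 m lattice spacing, every grid node is within range of its neighbours and every random node lies within range of some grid node. This is one way to read "placed in a grid to ensure connectivity".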

|

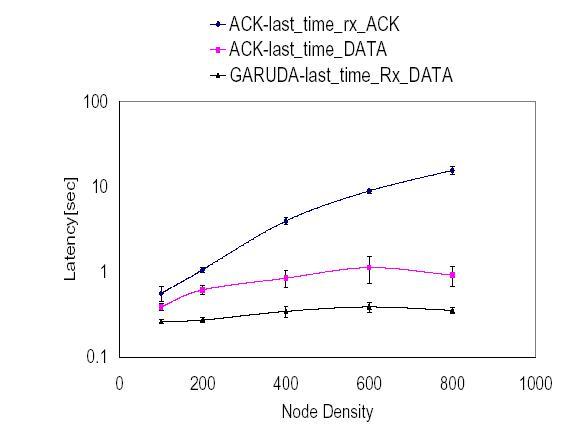

| Figure 1 |

|---|

In Figure 1, we compare the latencies of the GARUDA scheme and the ACK-based scheme for single-packet reliable delivery. The latency of the GARUDA scheme is significantly smaller because its two-radio approach uses an implicit NACK scheme: no explicit NACKs are sent to the sender when a packet is not received, so the load in the network does not increase. For the same reason, the latency scales well as the number of nodes increases. In the ACK-based scheme, by contrast, the latency is appreciably higher because every ACK is addressed to the sender node and the sender retransmits if it has not received the ACK from a particular node. The ACKs themselves increase the traffic in the network, causing additional congestion-related losses.

|

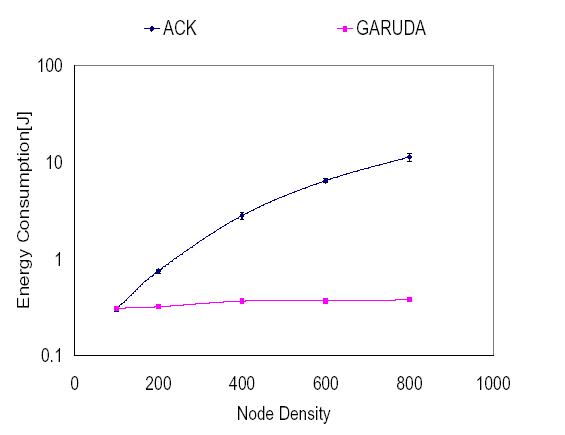

| Figure 2 |

|---|

Figure 2 shows the energy consumed per node, in joules, for both schemes for single-packet reliability. The energy consumed per node is significantly smaller for the GARUDA scheme than for the ACK-based scheme, even though GARUDA uses an additional radio. There are two reasons. First, the total number of transmissions on the first radio is significantly smaller for the GARUDA framework; in fact, it increases only linearly with the number of nodes. Since the total number of transmissions is directly proportional to the total number of receptions, the total energy consumed per node for the first radio increases only slightly with increasing node density. Second, the second radio is just a busy-tone radio that indicates whether the node has received the packet, and thus requires significantly less power.

|

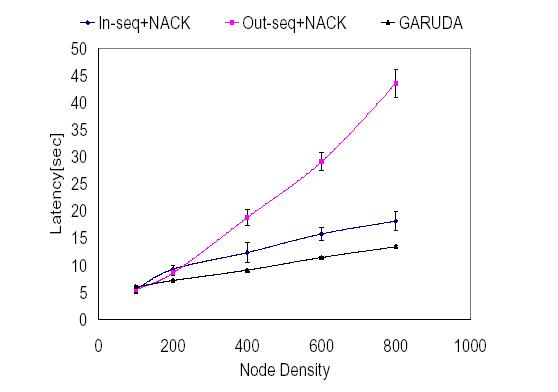

| Figure 3 |

|---|

Figure 3 compares the latencies of the GARUDA framework and two other NACK-based schemes for multiple-packet reliability. The GARUDA framework has significantly lower latencies than the other two schemes as the node density increases. The reasons are two-fold: using a designated server rather than a non-designated one reduces the amount of data sent, and out-of-sequence forwarding is used without incurring the NACK implosion problem.

Figure 4

The energy consumed per node for all three schemes for multiple-packet reliability is shown in Figure 4. The average energy consumed per node is significantly smaller for GARUDA than for the other two schemes. For all three schemes, the average energy consumed is directly proportional to the number of transmissions, which is the sum of the number of requests sent and the number of data packets sent per node (apart from the energy consumed for the first packet). This sum is smallest for the GARUDA scheme, so the energy consumed per node is also smallest for this scheme.
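The proportionality argument above can be written as a simple per-node energy model. The symbols are assumptions introduced here for illustration and do not appear in the original text: $E_{\text{first}}$ is the energy spent on the first packet, $e_{\text{tx}}$ is a constant energy cost per transmission, and $N_{\text{req}}$ and $N_{\text{data}}$ are the numbers of requests and data packets sent per node.

```latex
E_{\text{node}} \;\approx\; E_{\text{first}} + e_{\text{tx}} \left( N_{\text{req}} + N_{\text{data}} \right)
```

Under this reading, the scheme with the smallest $N_{\text{req}} + N_{\text{data}}$ consumes the least energy per node, provided $E_{\text{first}}$ and $e_{\text{tx}}$ are comparable across schemes.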

CONSISE RESULTS

The environment for all scenarios comprises 800 nodes. Depending on whether there are 1, 2 or 4 sinks, 800, 400 or 200 nodes respectively are assigned to each sink. Among these nodes, the first 100 are placed in a grid to ensure connectivity. The remaining 700, 300 or 100 nodes per sink (in the 1, 2 and 4 sink cases respectively) are randomly deployed in a 600 m x 600 m square area, with the sinks located on the edges of the squares at opposite ends. The channel rate and the transmission range are the same as before, while the message consists of 50 packets and is transmitted by all the sinks simultaneously.
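The node counts quoted above follow from an even split of the 800 nodes among the sinks. The short sketch below reproduces that arithmetic; the function name `nodes_per_sink` is hypothetical, and the even split is an assumption that matches the figures in the text.

```python
TOTAL_NODES = 800          # nodes in every CONSISE scenario (from the text)
GRID_NODES_PER_SINK = 100  # grid-placed nodes per sink (from the text)


def nodes_per_sink(num_sinks):
    """Return (assigned, randomly_deployed) node counts for each sink."""
    if num_sinks <= 0 or TOTAL_NODES % num_sinks != 0:
        raise ValueError("number of sinks must evenly divide the node count")
    assigned = TOTAL_NODES // num_sinks
    return assigned, assigned - GRID_NODES_PER_SINK


if __name__ == "__main__":
    for sinks in (1, 2, 4):
        print(sinks, nodes_per_sink(sinks))
```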

Figure 5

Figure 5 presents the latency as a function of the converged sending rate, with and without the proposed congestion control, for 1, 2 and 4 sinks. CONSISE mitigates the effects of congestion significantly better in all three cases because it adjusts the sending rate to the available bandwidth, incurring minimal losses. In each case, CONSISE brings the sending rate down to the rate corresponding to the minimum latency, so its curve reduces to a single point.

Figure 6

Figure 6 shows the number of data retransmissions for CONSISE and the basic scheme for different numbers of sinks. CONSISE incurs significantly fewer retransmissions than the basic scheme at high sending rates. This is mainly because the proposed congestion control scheme adapts to the available network bandwidth without overwhelming the network. The rate adaptation mechanism proactively holds back a retransmission unless it is sure that the local channel can support the transmission without a loss.

Publications & Presentations:

- R. Vedantham, S.-J. Park and R. Sivakumar, "Sink-to-Sensors Congestion Control," to appear in IEEE International Conference on Communications (ICC), Seoul, Korea, May 2005.

- S.-J. Park, R. Vedantham, R. Sivakumar and I.F. Akyildiz, "A Scalable Approach to Reliable Downstream Data Delivery in Wireless Sensor Networks," regular paper in Proceedings of ACM International Symposium on Mobile Ad Hoc Networking and Computing (MOBIHOC), Roppongi, Japan, May 2004.

- S.-J. Park, R. Vedantham and R. Sivakumar, "Downstream Reliability in Wireless Sensor Networks," GNAN Technical Report, July 2003.

- S.-J. Park and R. Sivakumar, "Sink-to-Sensors Reliability in Sensor Networks," extended abstract in Proceedings of ACM International Symposium on Mobile Ad Hoc Networking and Computing (MOBIHOC), Annapolis, MD, USA, June 2003.

Software Downloads:

For our simulations we use version 2.1b9a of the ns-2 simulator, which is available on the web. Requests for the actual simulation scripts should be sent to gtg217m@prism.gatech.edu.

People:

- Ramanuja Vedantham (Student)

- Seung-Jong Park (Alumnus)

- Raghupathy Sivakumar (Professor)

References & Related Work:

- A. Campbell, C-Y. Wan, and L. Krishnamurthy, "PSFQ: A Reliable Transport Protocol for Wireless Sensor Networks," in Proceedings of ACM International Workshop on Sensor Networks and Architectures, Atlanta, Sept. 2002.

- S. V. Krishnamurthy, Z. Ye, and S. K. Tripathi, "A framework for reliable routing in mobile adhoc networks," in IEEE INFOCOM, 2003.

- J. Kulik, W. Heinzelman, and H. Balakrishnan, "An efficient reliable broadcasting protocol for wireless mobile adhoc networks," in IASTED Networks, Parallel and Distributed Computer Processing.

- S. Shenker, D. Ganesan, R. Govindan, and D. Estrin, "Highly-resilient, energy-efficient multipath routing in wireless sensor networks," Proceedings of ACM MOBIHOC '01, Oct 2001, pp. 251-253.

- Sze-Yao Ni, Yu-Chee Tseng, Yuh-Shyan Chen, and Jang-Ping Sheu, "The broadcast storm problem in a mobile ad hoc network," Proceedings of ACM MOBICOM, 1999, pp. 151-162.